Prototyping Bakery Concepts with AI

Project Type: AI Exploration

Role: Designer

Tools: Adobe Firefly, Illustrator, Photoshop, Adobe Stock

Timeline: Fall 2024

Overview

This project explores how generative AI can be used as a visual prototyping tool in the food and beverage space. I created Mochi Time, a fictional mochi donut shop preparing for a Halloween-themed promotion, to test whether AI could render realistic product visuals without baking physical samples. A mochi donut is a ring-shaped pastry made with glutinous rice flour, known for its chewy texture and bouncy bite. Using Adobe Firefly, I translated concept sketches into high-quality images that captured the texture, color, and personality of each donut design.

The final visuals were assembled into a promotional poster. Working this way allowed for rapid exploration of different toppings, glazes, and character concepts, and it highlights how AI can streamline early-stage product development while supporting playful, creative experimentation.

Donut Where to Start

Problem

Creating seasonal themed baked goods can be time-consuming, as each concept often needs to be physically baked and refined before it can be evaluated or marketed. It is also difficult to develop promotional materials for upcoming offerings during the early concept phase, when the specialty donuts have not yet been produced and cannot be photographed. This makes it challenging to test ideas, visualize presentations, or build anticipation ahead of launch.

Proof of Concept

Goal

The goal of this project is to explore whether generative AI can be used to prototype themed baked goods that look photorealistic and do not appear artificially generated. This includes testing the AI's ability to visualize the overall look and feel of each concept, experiment with color palettes and toppings, and simulate presentation styles that could guide both product development and promotional design.

Baking Up Some Ideas

Ideas

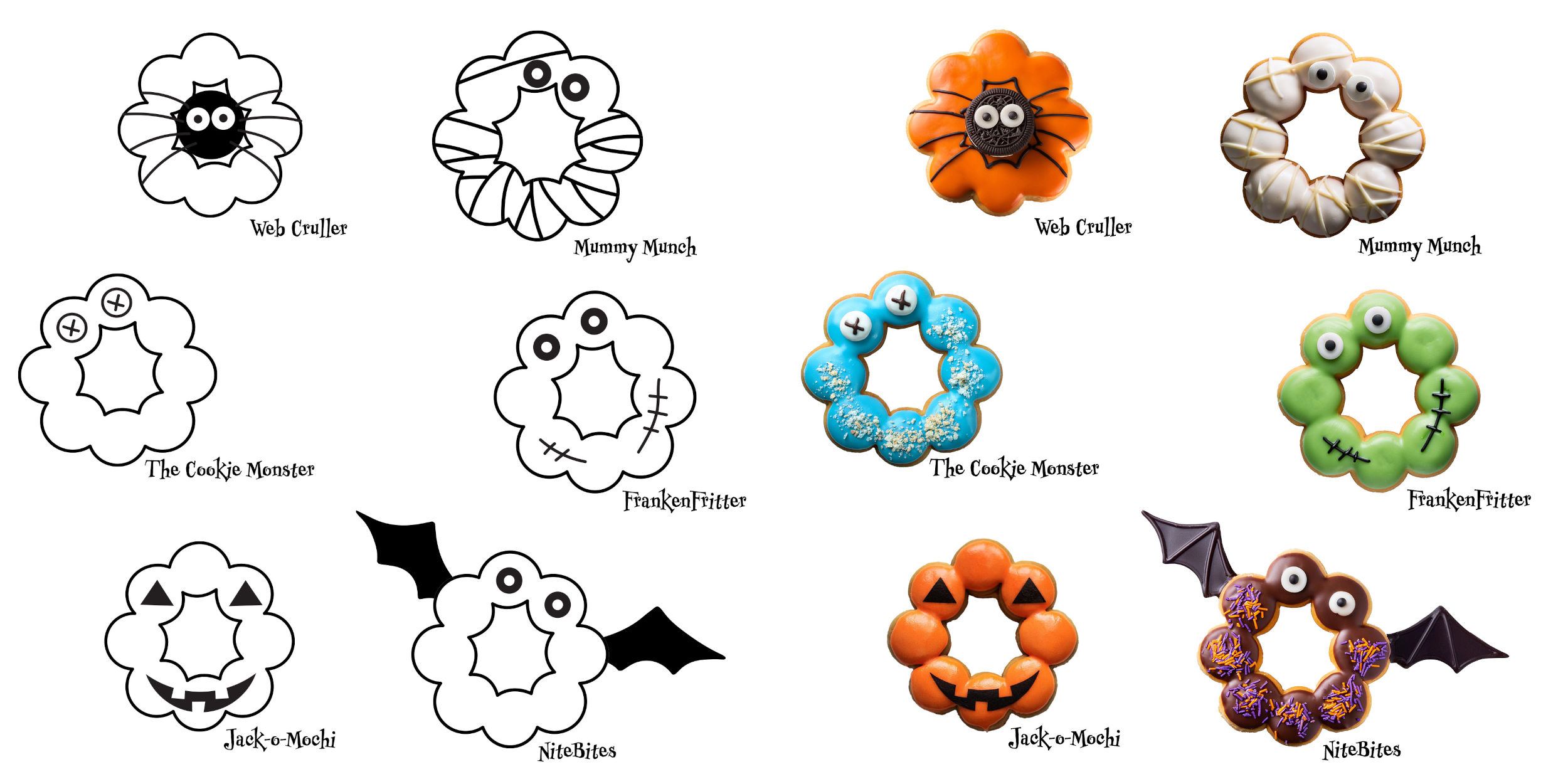

The project began with the creation of six Halloween-themed mochi donut concepts for Mochi Time. Each design was imagined as a playful character with a seasonal twist and a pun-based name that combined Halloween elements with the shape and charm of mochi donuts. These conceptual ideas served as the creative foundation for the visual exploration that followed.

Donut Concepts:

- Cookie Monster - Blue frosting dusted with cookie crumbs and topped with candy eyes

- FrankenFritter - Green frosting accented with chocolate stitches and a Frankenstein-inspired look

- Web Cruller - Orange frosting with a black Oreo cookie spider in the center, complete with candy eyes and chocolate legs

- Jack-o-Mochi - Orange frosting with chocolate jack-o'-lantern eyes and a carved smile

- Mummy Munch - White frosting wrapped in white chocolate lines to resemble a mummy, with candy eyes peeking through

- NiteBites - Chocolate frosting with black bat wings, dusted with colorful sprinkles

From Sketch to Snack

Process

After developing the initial donut concepts, I explored how to bring them to life using AI-assisted image generation. The process involved trial and error, prompt refinement, and visual iteration to achieve realistic results that remained true to the original ideas.

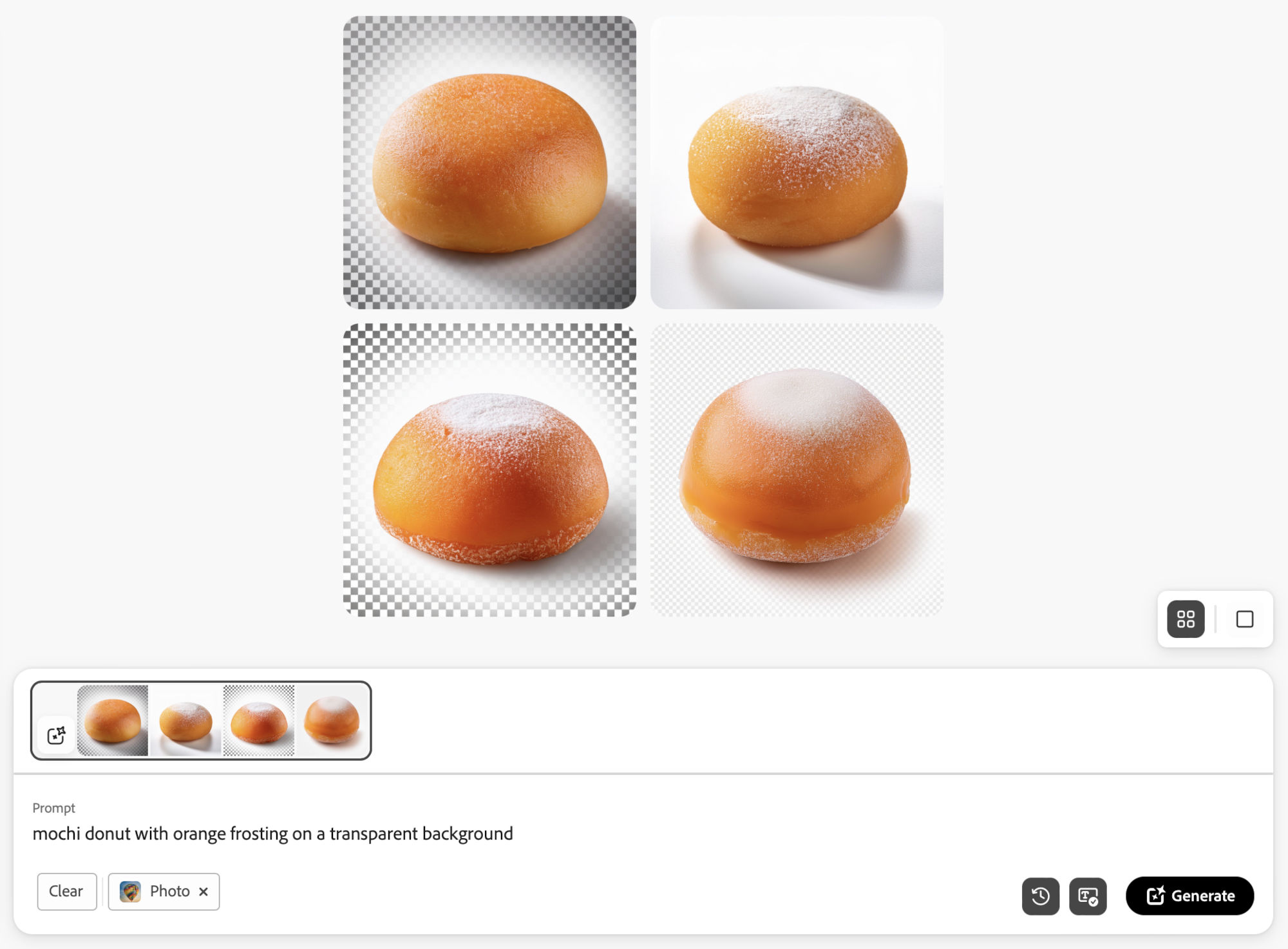

Text Prompt Trials

I began by experimenting with the text-to-image functionality in Adobe Firefly using only text prompts, without any reference images. I quickly discovered that the tool did not recognize the phrase "mochi donut," often rendering regular donuts without the distinctive ring shape made from connected mochi balls. This revealed the limitations of relying solely on text.

Simple Shape Reference

To provide more visual context, I created a simple black-and-white outline of a mochi donut to use as a composition reference image in Adobe Firefly. While this helped with basic shape recognition, the AI frequently misinterpreted the design, placing candy eyes or spiders on each individual mochi ball and introducing random elements outside the donut.

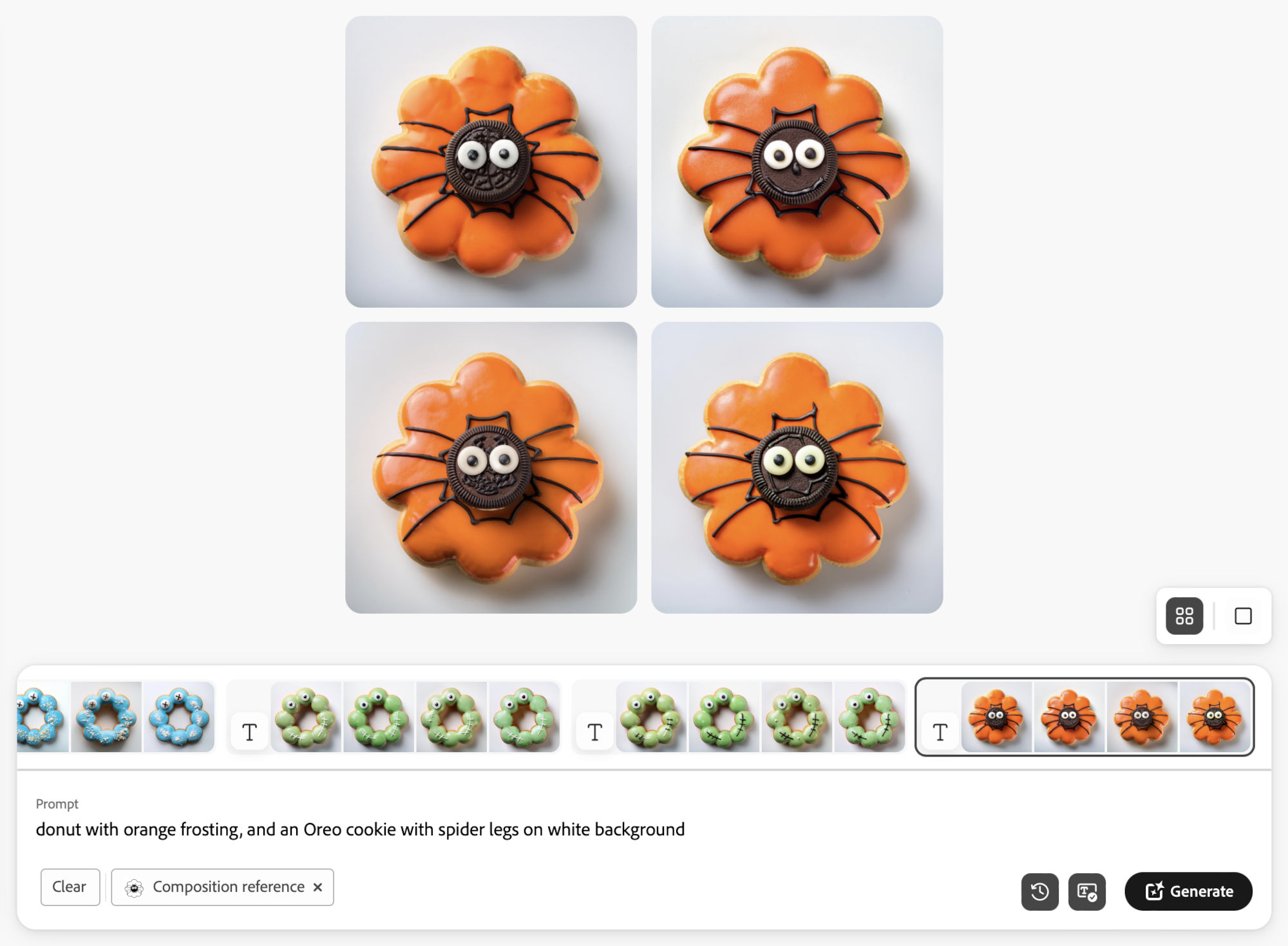

Image Reference and Prompting

To improve accuracy, I created detailed black-and-white vector graphics in Illustrator to guide Adobe Firefly’s image generation. Paired with focused prompts such as "Donut with light blue frosting, two white eyes with X as pupil, dusted with cookie crumbs," these references produced more consistent results. I iterated on frosting colors, eye shapes, and toppings until each donut matched the intended look and personality.

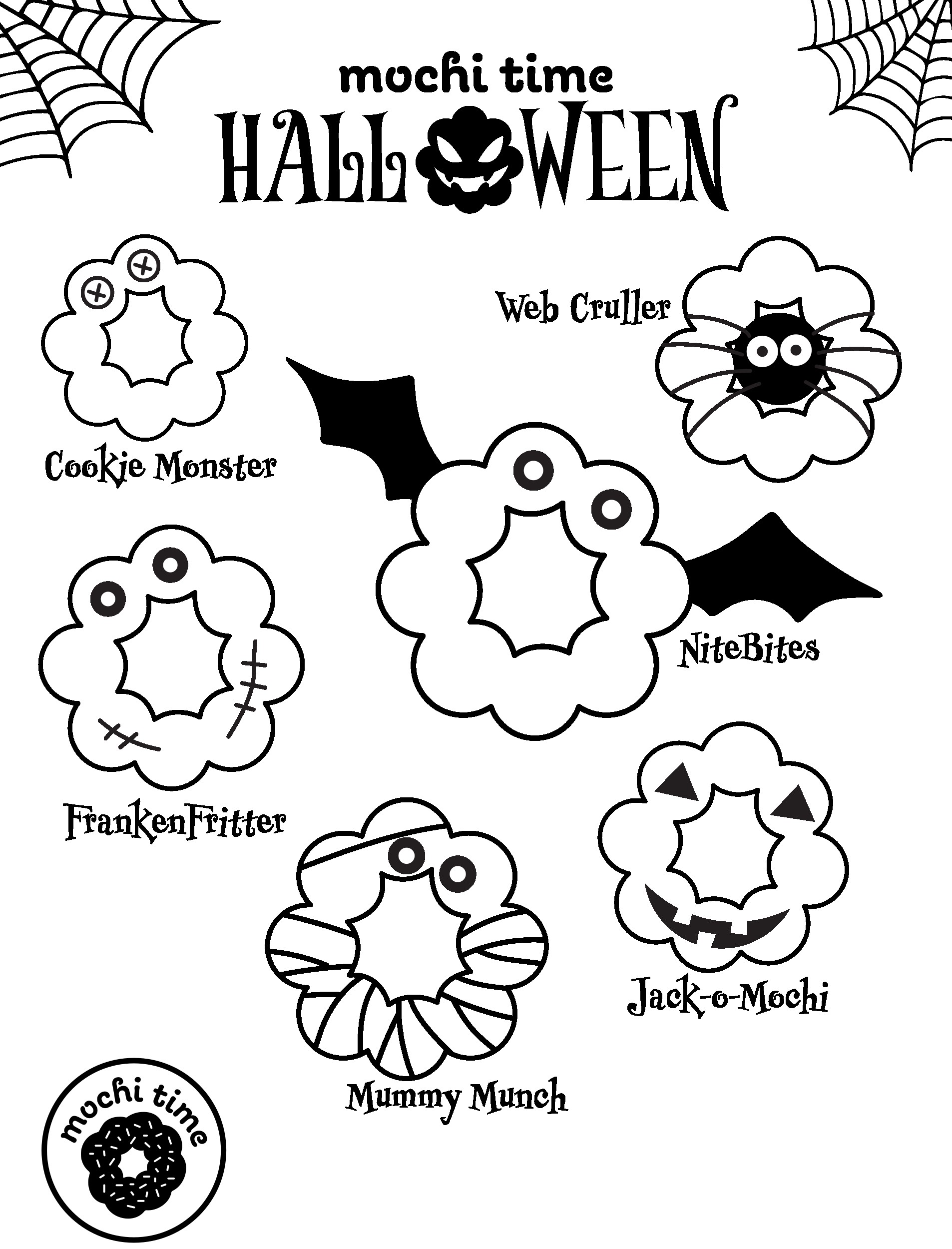
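
The focused prompts described above follow a repeatable pattern: a frosting color followed by a short list of details. As a minimal sketch of how that pattern could be kept consistent across iterations, the Python snippet below assembles a prompt from a concept description. The `DonutConcept` class and `build_prompt` function are hypothetical names introduced only for illustration; they are not part of Adobe Firefly or of the original workflow, where prompts were typed directly into the tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DonutConcept:
    """Hypothetical container for one donut idea (illustration only)."""
    name: str
    frosting: str
    details: tuple[str, ...]


def build_prompt(concept: DonutConcept) -> str:
    """Join the frosting color and details into one comma-separated prompt."""
    parts = [f"Donut with {concept.frosting} frosting", *concept.details]
    return ", ".join(parts)


# The Cookie Monster concept, using the wording of the prompt quoted above.
cookie_monster = DonutConcept(
    name="Cookie Monster",
    frosting="light blue",
    details=("two white eyes with X as pupil", "dusted with cookie crumbs"),
)

print(build_prompt(cookie_monster))
```

Changing a single field, such as the frosting color or one topping, produces a new prompt variant while the rest of the wording stays fixed, which mirrors the one-change-at-a-time iteration described in this step.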

Final Image Assembly

After generating the visuals, I used Photoshop to isolate each donut and, in some cases, merged features from multiple versions to create the strongest final image. This allowed for subtle adjustments while maintaining realism. I then designed the final poster in Illustrator, incorporating the rendered donuts with branding, stock imagery, and Halloween-themed typography.

The Sweet Reveal

Final Design

The final poster highlights the transformation from concept to photorealistic design. The black-and-white version features the original vector outlines, while the color version showcases AI-generated visuals that bring each donut to life. I also created mockups of the poster to demonstrate its potential in real-world settings.

Food for Thought

Reflections

Overall, I was very impressed with the images rendered by Adobe Firefly. They were realistic enough to resemble actual food items, and I didn’t even need to break out my apron. Providing a reference image that matched my intended composition was essential for guiding the AI and making sure the text prompts were interpreted correctly. Once that foundation was in place, the process became much more consistent and effective.

That said, it was still occasionally frustrating when similar prompts produced noticeably different results from one generation to the next. This variability required some trial and error, and in several cases, I used Photoshop to merge desirable features from multiple outputs into a final, cohesive image.

Ultimately, I believe the goal of the project was achieved. I was able to use generative AI to prototype realistic, themed baked goods without having to produce physical samples. The process was both efficient and creatively flexible, making it a viable method for visualizing seasonal food concepts in early development or promotional planning stages.